Facebook Invented a New Language for Machines to Solve Complex Math Equations

Credit to Author: Kevin McElwee | Date: Wed, 15 Jan 2020 19:24:57 +0000

Despite being built on calculus and linear algebra by an army of statisticians around the world, neural networks have trouble understanding math. Or at least, they have trouble understanding how humanity writes math equations. Facebook’s AI research team, however, claims to have developed a new approach to turn complex math problems into machine-readable data. And using the same kind of technology that translates English into Mandarin, the researchers were able to translate problems into solutions.

The problem is two-fold: How do you explain a math problem to a statistical model, and more importantly, how do you get a solution back out of it? Facebook’s breakthrough was treating variables and equations as parts of speech. Similar to diagramming a sentence, the program turns equations into a tree that can be read by a computer like any other language. Machine translation is already an extensively researched field, so the Facebook AI team simply used a popular translation model to map problems to solutions.
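To make the tree idea concrete, here is a minimal sketch in Python. It is not Facebook’s code. It assumes the tree is flattened into a prefix-notation token sequence (operator first, then its operands), which is one common way to hand a tree to a translation model. The `to_prefix` function and the token names in `OPS` are hypothetical, and Python’s built-in `ast` parser stands in for whatever parser the researchers used.

```python
import ast

# Hypothetical token names for each operator; not taken from Facebook's work.
OPS = {ast.Add: "add", ast.Sub: "sub", ast.Mult: "mul", ast.Div: "div", ast.Pow: "pow"}

def to_prefix(expr):
    """Parse an infix expression and flatten its tree into prefix tokens."""
    def walk(node):
        if isinstance(node, ast.BinOp):
            # Operator first, then the left and right subtrees.
            return [OPS[type(node.op)]] + walk(node.left) + walk(node.right)
        if isinstance(node, ast.Call):
            # Function applications such as cos(x).
            tokens = [node.func.id]
            for arg in node.args:
                tokens += walk(arg)
            return tokens
        if isinstance(node, ast.Name):
            return [node.id]
        if isinstance(node, ast.Constant):
            return [str(node.value)]
        raise ValueError("unsupported syntax: " + ast.dump(node))

    return walk(ast.parse(expr, mode="eval").body)

print(to_prefix("2 + 3 * x**2"))
# ['add', '2', 'mul', '3', 'pow', 'x', '2']
```

The flat list carries the same information as the tree but needs no parentheses, so a sequence-to-sequence model can read it the way it reads the words of a sentence.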

“Our solution was an entirely new approach that treats complex equations like sentences in a language,” Facebook researchers wrote in a blog post explaining the technique.

Facebook limited its research to solving integration problems and differential equations, two areas of mathematics notorious for lengthy compute times and inexact solutions, but nevertheless essential to pushing forward research in countless subjects, like fluid dynamics and biological processes.

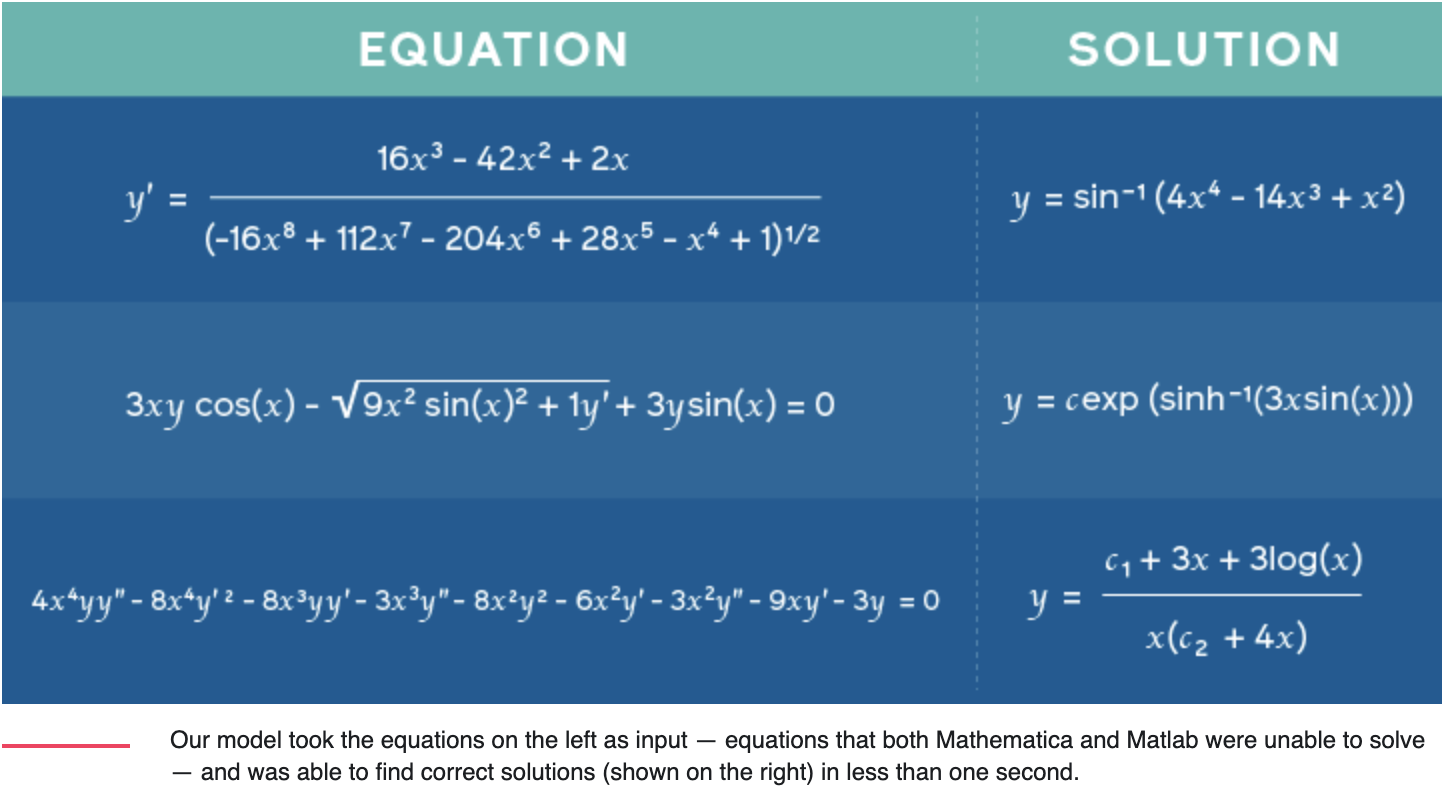

Facebook’s model significantly outperformed existing software. When posed with complex integration problems, for example, Facebook’s model achieved 99.7 percent accuracy compared to Mathematica’s 84 percent. Mathematica and Matlab, trusted commercial software that tackles similar problems, use line-by-line calculations to achieve solutions, albeit after lengthy runtimes. The advantage of a machine learning model is that once a neural network is trained, solutions are delivered almost immediately. Facebook listed multiple problems its model could solve in half a second for which Mathematica and Matlab took more than three minutes.

As with any neural network, the model’s output isn’t guaranteed to be correct. Models are built on pattern recognition and approximation, so engineers should be cautious before launching a rocket based on results spat out by a black box. Even if mathematical nonsense were fed into the model, it would still return a guess. Thankfully, however, the model’s output can be checked by traditional computational techniques. To grossly oversimplify, a computer can plug in values for x to check that its answers make sense.
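The “plug in values for x” check can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the verification the researchers used: it tests a proposed integral by estimating its derivative numerically at random points and comparing that with the original function. The function name `looks_like_antiderivative`, the sampling range, and the tolerances are all hypothetical choices.

```python
import math
import random

def looks_like_antiderivative(f, F, lo=-2.0, hi=2.0, samples=50,
                              h=1e-5, tol=1e-4, seed=0):
    """Spot-check that F'(x) matches f(x) at random points in [lo, hi]."""
    rng = random.Random(seed)
    for _ in range(samples):
        x = rng.uniform(lo, hi)
        # Central-difference estimate of the derivative of F at x.
        derivative = (F(x + h) - F(x - h)) / (2 * h)
        if abs(derivative - f(x)) > tol * (1 + abs(f(x))):
            return False
    return True

def integrand(x):
    return x * math.cos(x)

def good_guess(x):
    # Correct: the derivative of x*sin(x) + cos(x) is x*cos(x).
    return x * math.sin(x) + math.cos(x)

def bad_guess(x):
    # Wrong: the derivative of x*sin(x) is sin(x) + x*cos(x).
    return x * math.sin(x)

print(looks_like_antiderivative(integrand, good_guess))  # True
print(looks_like_antiderivative(integrand, bad_guess))   # False
```

A passing check is evidence rather than proof, since it samples only a handful of points, but it is cheap enough to run on every answer a model produces.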

Given its ability to outperform commercial software, the model probably found important rules and relationships in solving complex problems. But a severe drawback to this technology is that the model can never explain why or how it reached the result that it did. It’s a black box. Any mathematical revelations it found are locked within its neural layers.

The research also pointed to a broader lesson about machine learning in general. Fixing a simple communication error dramatically improved these models. Neural networks could always perform these advanced calculations; researchers simply needed to communicate better with their models. In its blog post, the Facebook team wrote, “the perceived limitations of neural networks may be limitations of imagination, not technology.”

Like most machine learning research, the Facebook report was published for anyone to use, and may eventually find its way into Mathematica and Matlab’s software after passing more advanced scrutiny. While the work is helpful for many in applied fields, math purists may remain skeptical, perhaps for their own survival. Assuming Facebook’s claims pan out, neural networks are taking a consequential step closer to regions of mathematics often reserved for theorists.

Kevin McElwee is a machine learning engineer and data journalist based in Princeton, NJ.

This article originally appeared on VICE US.