How Does Facial Recognition Technology Work? – 5 Real World Use Cases

Credit to Author: Bill Mann | Date: August 19, 2019

Facial Recognition systems are becoming ever more common. From airports to the streets of big cities to individual shops and apartment buildings, this tech is being applied everywhere. At the same time, there are functional and privacy concerns with these kinds of biometric identification systems.

In this article, we will look at the state of Facial Recognition technology today. We’ll cover the basics of the technology first, then look at some of the systems that are in use today. While things are moving fast in the field of Facial Recognition, by the end of the article, you should have a good sense of where things stand today, both the promise and the peril.

Before we get into the current uses, let’s go over how this incredible technology works.

While we humans recognize faces by instantaneous pattern recognition, machines do it completely differently. Machines recognize and remember faces by taking all sorts of measurements of facial characteristics, then saving the resulting data as a representation of the face.

While there are variations in the tech, the basic process goes something like this:

- The system receives an image to analyze. This could be a still photo like those taken by systems at US airports, or a frame from a video of a person in motion. Some systems use techniques like infrared lidar and thermal imaging sensors to get additional information. Researchers are reportedly even working on systems that use sonar (like a bat or a submarine) to improve accuracy. As a result, the ‘image’ to be analyzed might actually be a set of images created with different types of sensors.

- The system searches for a face or faces in the image. It looks for characteristics that indicate the presence of a face, such as eyes, ears, nose, mouth, cheekbones. Once it identifies enough of these, the system has ‘found’ a face.

- The system measures various characteristics of the face. Some characteristics that might be used are the distance between your eyes, the depth of your eye sockets, the shape of your cheekbones, and the distance from your forehead to your chin. Newer systems generally consider many more characteristics than this, including things like skin color and texture. They can also adjust for variable lighting, a face that isn’t looking directly into the camera, and so on.

- The resulting data is stored as a representation of the face.

- The representation of the face is compared to a database of known faces.

- The system identifies the best match amongst the faces in the database and determines whether the match is close enough to be considered a hit. If there is not a match, the data may be stored to be matched with other personally identifiable data later, perhaps at the checkout counter in a store.

As you can imagine, the most advanced facial recognition systems are much more complex than this, but you now have a general idea of how the process works.
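The last few steps (store a representation, compare it to a database, decide whether the best match is close enough) can be sketched in a few lines of Python. This is a toy illustration, not how any particular product works: the three measurements per face, the names in `KNOWN_FACES`, and the `threshold` value are all made up for the example, and real systems use far richer representations.

```python
import math

# Hypothetical database: each face is a short list of measurements
# (for example eye distance, eye-socket depth, forehead-to-chin distance).
KNOWN_FACES = {
    "alice": [6.2, 2.1, 11.8],
    "bob": [5.4, 2.6, 12.9],
}


def distance(a, b):
    """Euclidean distance between two equal-length lists of measurements."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def best_match(probe, database, threshold=0.5):
    """Find the closest known face; report a hit only if it is close enough.

    Returns (name, distance) for a hit, or (None, distance) when even the
    closest face is farther away than the (assumed) threshold.
    """
    name, dist = min(
        ((n, distance(probe, v)) for n, v in database.items()),
        key=lambda pair: pair[1],
    )
    return (name, dist) if dist <= threshold else (None, dist)


# A face that is very close to a stored one, and a face that matches nobody.
hit, hit_dist = best_match([6.1, 2.2, 11.9], KNOWN_FACES)
miss, miss_dist = best_match([9.0, 1.0, 15.0], KNOWN_FACES)
print(hit, miss)
```

The threshold is the interesting design choice: set it loosely and the system reports more false matches, set it tightly and it misses people who are actually in the database.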

Modern Facial Recognition techniques are said to be more accurate than human recognition. However, at least some of the facial recognition systems in use now are pathetically bad at the job.

Maybe they are using older, less accurate technology (it can take years for a system to make it out of the lab and into the world). Or it could be that recognizing faces out in the real world is much harder than in the lab. Perhaps as Dr. WonSook Lee explains in the video, the systems that aren’t getting good results aren’t trained well enough.

Whatever the reason for the bad results some systems get, there is a huge amount of money and research going into this field, and it is certain that facial recognition systems will keep getting better and better.

One way to get a sense of where things stand is to look at some recent examples of the use of Facial Recognition Technology. In the vignettes that follow, we will look at several real-world applications of facial recognition technology and list their pros and cons.

Promise: More efficient policing.

Peril: Exposes innocent passersby to unwanted surveillance. Frequently generates false positives, associating an innocent person’s face with a criminal’s records, at least temporarily. Has caused confrontations between police and citizens who didn’t want to be scanned.

Until recently, the London Metropolitan Police was testing its Live Facial Recognition (LFR) system on the streets of London, making millions of innocent civilians part of the test without their consent. The system used cameras, facial recognition software, and an AI to compare the faces of passersby with those in a database of people wanted by the police.

If the AI detected a match, it would notify a nearby police officer. The officer would evaluate the information received from the LFR system and decide whether or not to approach and interrogate the person. The idea here is that even wanted persons need to go out into the world sometimes, and when they do, LFR could spot them and point the police right to them. This could be much more efficient than tracking down subjects the old-fashioned way.

However, the system has had many problems. One is the invasion of privacy caused by a system that at least momentarily generates and records Biometric data for every person passing in front of its many cameras. According to the LFR website,

“The system will only keep faces matching the watch list, these are kept for 30 days, all others are deleted immediately. We delete all other data on the watch list and the footage we record.

Anyone can refuse to be scanned; it’s not an offense or considered ‘obstruction’ to actively avoid being scanned.”

These guarantees turned out not to be very reassuring, for a few reasons. First, the system has a tendency to make false-positive identifications. That is, it mistakes innocent people for people whose faces are on its watch list. This results in innocent people being stopped and questioned by the police and having their identity stored in the LFR database for some amount of time.

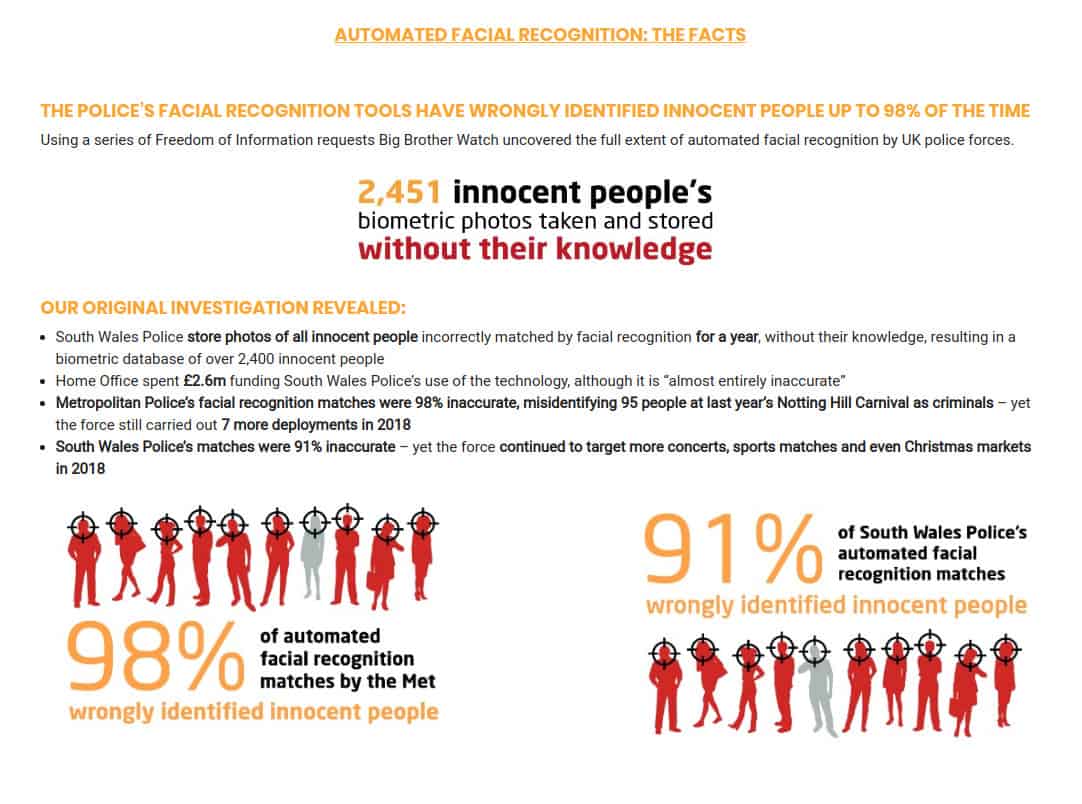

How inaccurate is the LFR system? Big Brother Watch, a UK privacy group, has a breakdown of the details here. Here’s a screen capture of the highlights from that breakdown:

As you can see, it seems the system wrongly identified innocent people the vast majority of the time. What happens in these cases? According to Big Brother Watch, when the system incorrectly identified people, their biometric data was still retained for a year, even though they had done nothing wrong.
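One reason a street-scanning system can be wrong most of the time, even when its software sounds accurate on paper, is simple arithmetic: almost everyone who walks past the camera is not on the watch list. The sketch below works through that arithmetic. Every number in it is invented for illustration; none of them come from the Metropolitan Police or from Big Brother Watch.

```python
def share_of_alerts_that_are_wrong(crowd_size, wanted_in_crowd,
                                   hit_rate, false_alarm_rate):
    """Fraction of all alerts that point at an innocent person."""
    # Wanted people who are correctly flagged.
    true_alerts = wanted_in_crowd * hit_rate
    # Innocent people who are flagged by mistake.
    false_alerts = (crowd_size - wanted_in_crowd) * false_alarm_rate
    return false_alerts / (true_alerts + false_alerts)


# Hypothetical scenario: 100,000 passersby, 10 of them on the watch list,
# a system that spots 90% of wanted faces and wrongly flags 0.1% of others.
share = share_of_alerts_that_are_wrong(
    crowd_size=100_000,
    wanted_in_crowd=10,
    hit_rate=0.9,
    false_alarm_rate=0.001,
)
print(f"{share:.0%} of alerts point at an innocent person")
```

Under these assumed numbers, roughly nine out of ten alerts are false, even though the system wrongly flags only one innocent passerby in a thousand.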

Who has access to the biometric data from those innocent people? How is it used? How is it protected from hackers and other forms of misuse? We don’t know the answers to these questions.

Given that the system was so incredibly inaccurate, it is good to know that people were allowed to avoid being scanned. That is backward from the perspective of privacy rights, but it is at least a way out. The reality of the situation, however, was very different.

As detailed in this article from the Mail Online, actively avoiding being scanned led to a passerby being forcibly detained and photographed so his face could be entered into the system. The Mail reported that the police finally confirmed that you could actively avoid being scanned. However, if you did actively avoid being scanned, they could consider that suspicious behavior, detain you, and force you to be scanned anyway.

As of the publication date of this article, the LFR system trials have ended. The police are now evaluating the results of the tests and deciding whether or not to deploy the system.

Promise: Rapid federal government detection of criminals using existing state records.

Peril: Possible violation of states’ rights. The United States Congress has not explicitly authorized this use of state databases.

ICE, the Immigration and Customs Enforcement agency of the United States, is in the news a lot today. Charged with securing the borders, among other things, ICE is the target of many groups opposed to US immigration policies. And its use of Facial Recognition technology recently made headlines.

ICE’s use of facial recognition technology to analyze driver’s license databases triggered reactions from many groups, including privacy advocates. There are several issues here. One is the conflict between states’ rights and the federal government. The US Congress has not approved this use of state motor vehicle records. However, approximately 20 states have for years allowed law enforcement agencies to use their databases. This includes the FBI, which is a federal agency.

The real problem seems to be the conflict over illegal aliens. Some of the states that give ICE access to their driver’s license databases have encouraged illegal aliens to obtain driver’s licenses. According to researchers at Georgetown University Law Center, ICE has sometimes targeted illegal aliens when scanning these databases. Whether looking for illegal aliens in these databases is a problem or not depends on where you stand on the immigration issue.

When questioned about the legality of these activities by National Public Radio (NPR), ICE stated that the agency “will not comment on investigative techniques, tactics or tools.” It also pointed out that “This is an established procedure that is consistent with other law-enforcement agencies.”

A more general privacy concern is that the FBI, ICE, and other agencies mine these databases for information. Few citizens are aware that getting a driver’s license exposes their information to Federal as well as State agencies. It isn’t clear which agencies have access to the data, nor what they are doing with it, and what happens to the data after it is “used.”

Promise: Faster, more efficient passage through US Customs.

Peril: False alarms can disrupt travel. A huge database of biometric data is a great target for hackers. Data on tens of thousands of travelers has already been stolen.

Since 2017, U.S. Customs and Border Protection (CBP) has been using Facial Recognition systems at various entry points to the United States. According to Federal Computer Week (FCW), the system has caught multiple imposters trying to cross the border with false documents. The system compares the face of the person currently holding the document to stored biometric data previously associated with that document.
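This kind of border check is a one-to-one comparison: rather than searching a whole database, the system only has to decide whether the live face matches the single record tied to the travel document. A minimal sketch of that idea follows. The use of cosine similarity, the three-number templates, and the 0.95 threshold are assumptions chosen for illustration, not details of CBP’s actual system.

```python
import math


def cosine_similarity(a, b):
    """Similarity of two equal-length vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def matches_document(live_template, stored_template, threshold=0.95):
    """True if the live face is similar enough to the one on file."""
    return cosine_similarity(live_template, stored_template) >= threshold


# Hypothetical templates: the record on file, the rightful holder
# photographed again at the border, and someone else using the document.
on_file = [0.20, 0.70, 0.10]
rightful_holder = [0.21, 0.69, 0.12]
impostor = [0.80, 0.10, 0.50]

holder_ok = matches_document(rightful_holder, on_file)
impostor_ok = matches_document(impostor, on_file)
print(holder_ok, impostor_ok)
```

Because only one comparison is made per traveler, a one-to-one check gives the system far fewer chances to produce a false match than scanning a crowd against a long watch list.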

More recently, facial recognition tools have been used to identify people who are entering and leaving the country at multiple airports. And the agency plans to expand its use of the technology greatly.

This comes despite growing public opposition to the use of facial recognition technologies. Civil libertarians and privacy advocates see large future risks from the deployment of these systems.

According to the Electronic Privacy Information Center’s United States Visitor and Immigrant Status Indicator Technology (US-VISIT) page,

As with other systems we’ve looked at, it is unclear who exactly has access to your biometric data, and what they are doing with it.

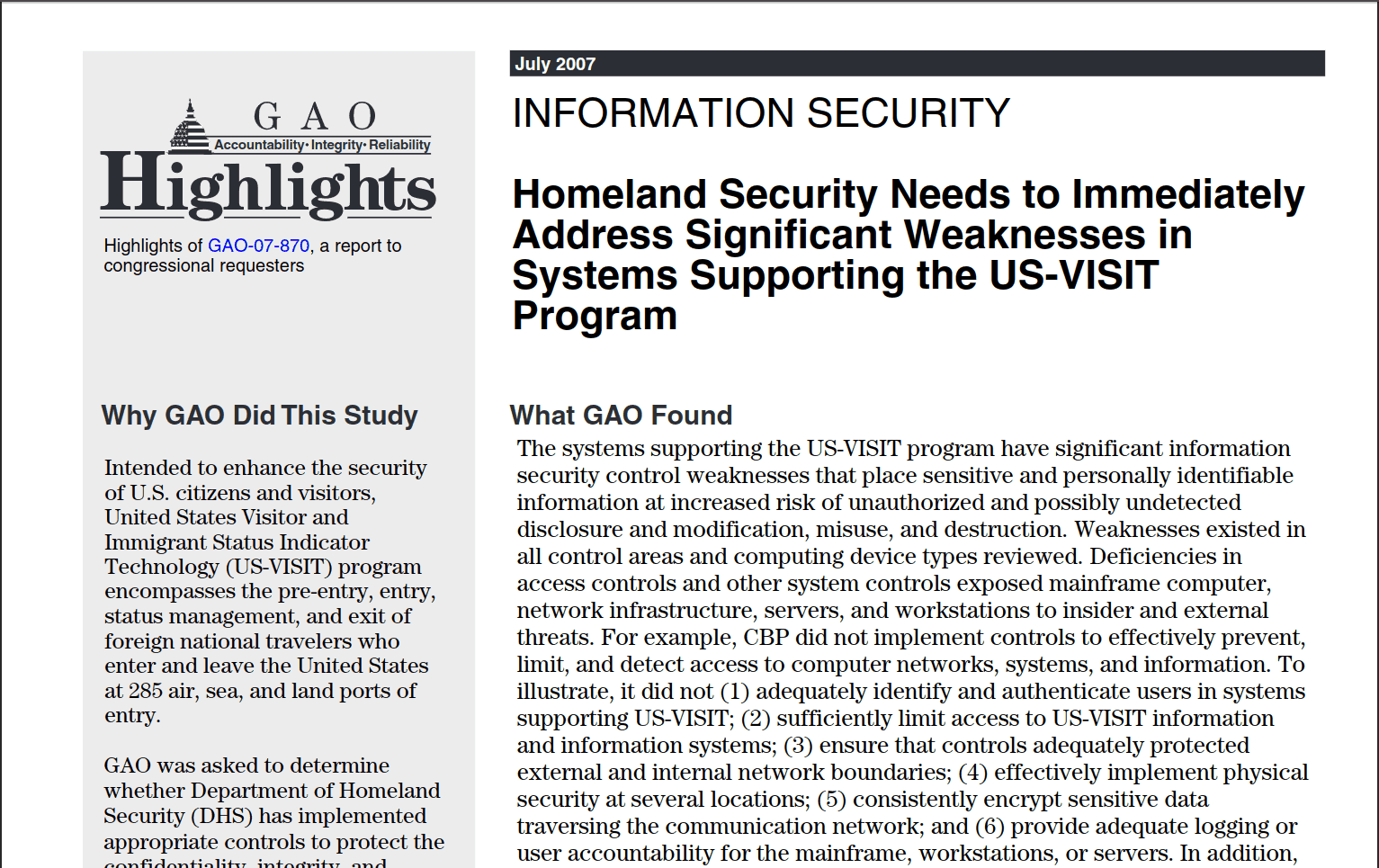

In addition, there are concerns with the security of the biometric and other personal information gathered by the system. As far back as 2007, the GAO found major problems with the computer systems supporting US-VISIT:

Note that in 2013, US-VISIT was renamed the Office of Biometric Identity Management (OBIM). It is unclear to us whether all the problems in the government’s computers have been resolved. But even if their systems are 100% secure (no computer system is 100% secure), there are other players involved here.

One website reported that biometric data on tens of thousands of travelers was stolen in a data breach. In May of 2019, CBP learned that a subcontractor had transferred data (license plates and traveler images) to their own computers. This kind of transfer is forbidden by CBP rules but was apparently done in defiance of those rules.

That data was then stolen from the subcontractor. According to comments from CBP, data for fewer than 100,000 travelers was stolen. CBP would not disclose the name of the subcontractor at fault. However, sources say that shortly after the hack, The Register discovered a site on the dark web containing hundreds of gigabytes of license plate images and other data, available for free to anyone who wanted it.

Promise: Automated tagging of users in photos.

Peril: Users claim Facebook recorded biometric data without their consent and sued. It creates a huge database of biometric data for hackers to target. Google, Apple, Microsoft, and others are doing the same. It is unclear what is done with the data and who else has access to it.

The things social media sites can do these days are incredible. One of their cool tricks is automatically tagging people in the photos you post. As you are probably aware, they apply Facial Recognition technology to get this done.

But not everyone is thrilled with this particular capability. As a result, a group of Facebook users launched a class-action lawsuit challenging Facebook’s use of their biometric data. Earlier this month, the U.S. Court of Appeals for the 9th Circuit in San Francisco ruled 3-0 that the case can proceed despite Facebook’s attempt to block it.

The case was started by three Facebook users from Illinois, where state law prohibits companies from collecting and storing biometric data without the user’s written consent.

Judge Sandra Ikuta wrote that “…the development of a face template using facial-recognition technology without consent (as alleged in this case) invades an individual’s private affairs.”

While it will probably be years before this case is closed, the results could help clarify what rights users have to their own biometric data.

Promise: Ads that are targeted to the viewer’s age, gender, and mood will be more effective.

Peril: Once this tech is widely deployed, it is a small step to full facial recognition and private surveillance of your activities.

Using a system created by the French company Quividi in 2015, some shoppers at upscale malls seem to be living in a science fiction movie. When they visit one of more than 40 Westfield shopping centers, they are surrounded by hundreds of smart billboards that tailor advertisements to be most effective on them individually.

The billboards have cameras feeding a Facial Analysis (not Facial Recognition) system that can identify characteristics like your age, gender, and mood. The company stresses that the system does not do Facial Recognition, meaning it doesn’t know that it is you, Jane Doe, from Perth looking at the billboard. The cameras instead record blurry images that are sufficient for the required analysis but not for Facial Recognition.

For example, the system might determine that the person looking at a particular billboard is likely:

- Female

- Age 30 to 35

- Very happy right now

Given this information, the system displays ads that are tailored to appeal to a person with these characteristics. That’s a little bit creepy. Even more creepy is that the system records the person’s reactions to the ads and sends the data to the relevant advertisers. This lets them tweak their ads to be even more effective at separating each shopper from their money.
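To make the idea concrete, here is a toy sketch of how estimated characteristics could drive ad selection. It is not Quividi’s method: the `ViewerEstimate` fields, the ad inventory, and the first-match rule are all hypothetical, invented only to show the general shape of such a system.

```python
from dataclasses import dataclass


@dataclass
class ViewerEstimate:
    """What a facial analysis system guesses about whoever is looking."""
    gender: str
    age_low: int
    age_high: int
    mood: str


# Hypothetical inventory; None means the ad does not care about that trait.
ADS = [
    {"name": "running shoes", "gender": "female",
     "min_age": 25, "max_age": 40, "mood": "happy"},
    {"name": "comfort food", "gender": None,
     "min_age": 0, "max_age": 120, "mood": "sad"},
    {"name": "generic mall promo", "gender": None,
     "min_age": 0, "max_age": 120, "mood": None},
]


def pick_ad(viewer, ads):
    """Return the name of the first ad whose targeting fits the viewer."""
    for ad in ads:
        if ad["gender"] not in (None, viewer.gender):
            continue
        # Skip ads whose age range does not overlap the estimated range.
        if viewer.age_high < ad["min_age"] or viewer.age_low > ad["max_age"]:
            continue
        if ad["mood"] not in (None, viewer.mood):
            continue
        return ad["name"]
    return None


shopper = ViewerEstimate(gender="female", age_low=30, age_high=35, mood="happy")
other = ViewerEstimate(gender="male", age_low=50, age_high=55, mood="neutral")
shopper_ad = pick_ad(shopper, ADS)
other_ad = pick_ad(other, ADS)
print(shopper_ad, "|", other_ad)
```

Note that nothing in this sketch needs to know who the shopper is. That is the distinction between facial analysis and facial recognition.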

According to this article in the Guardian, the system’s accuracy ranges from around 90% for gender to about 80% for mood.

The privacy concern here is the next step: from facial analysis to analysis plus recognition. Few of us are likely to want stores recording where we are, what we buy, what mood we are in, how we react to specific ads, and who knows what else a combined system could learn about us.

According to the article, other stores are further along the facial recognition path than Westfield. They say that chains like 7-Eleven, Target, and Walmart are all experimenting with using facial recognition tech in their stores. In 2018, a company called FaceFirst launched a system called Fraud-IQ, which uses facial recognition technology to scan for known shoplifters and people abusing store return policies.

Another system is called Brain by DeepCam. This system uses facial recognition to spot people who have been added to the store’s database of problem visitors to identify those who need to be intercepted by security.

Both FaceFirst and DeepCam market their services to US retailers. While it is unclear which companies will supply the systems, and how soon your every move will be monitored, at some point it’s likely that shoppers will be watched by AIs connected to facial recognition systems in stores.

In 2018, the American Civil Liberties Union (ACLU) questioned 19 of the top American retailers, plus Disney, about their use of facial recognition technology. Only one of the companies (Lowe’s) said it did use the technology, and only one (Ahold Delhaize, which owns several supermarket chains) said it did not use facial recognition technology.

The other 18 refused to confirm or deny the use of facial recognition technology. Maybe we are paranoid, but these non-answers seem to imply that the companies either are now or will soon be using the technology.

As we’ve seen, facial recognition systems are real, and they are deployed in the world. This is true despite their imperfections and the real privacy threats they embody.

One particular concern that I don’t believe has been getting enough attention is what happens when (not if, when) some database containing your facial characteristics or other biometric data gets hacked. This is your face we’re talking about, not some 8-character password. If biometric data gets stolen, you are in deep trouble. What are you going to do, get surgery to change the shape of your face?

Facial Recognition systems are here, and they aren’t going away. They are instead spreading with little or no control. If these systems are going to be practical, hopefully there will be some serious discussion of topics like when and where it is appropriate to use this technology. Ideally, that discussion will lead to strict rules on who controls your biometric data, how it can be used, and how it can be stored safely.
