Twitter Has Started Researching Whether White Supremacists Belong on Twitter

Credit to Author: Jason Koebler | Date: Wed, 29 May 2019 19:46:01 +0000

Twitter is conducting in-house research to better understand how white nationalists and supremacists use the platform. The company is trying to decide, in part, whether white supremacists should be banned from the site or should be allowed to stay on the platform so their views can be debated by others, a Twitter executive told Motherboard.

Vijaya Gadde, Twitter’s head of trust and safety, legal and public policy, said Twitter believes “counter-speech and conversation are a force for good, and they can act as a basis for de-radicalization, and we’ve seen that happen on other platforms, anecdotally.”

“So one of the things we’re working with academics on is some research here to confirm that this is the case,” she added.

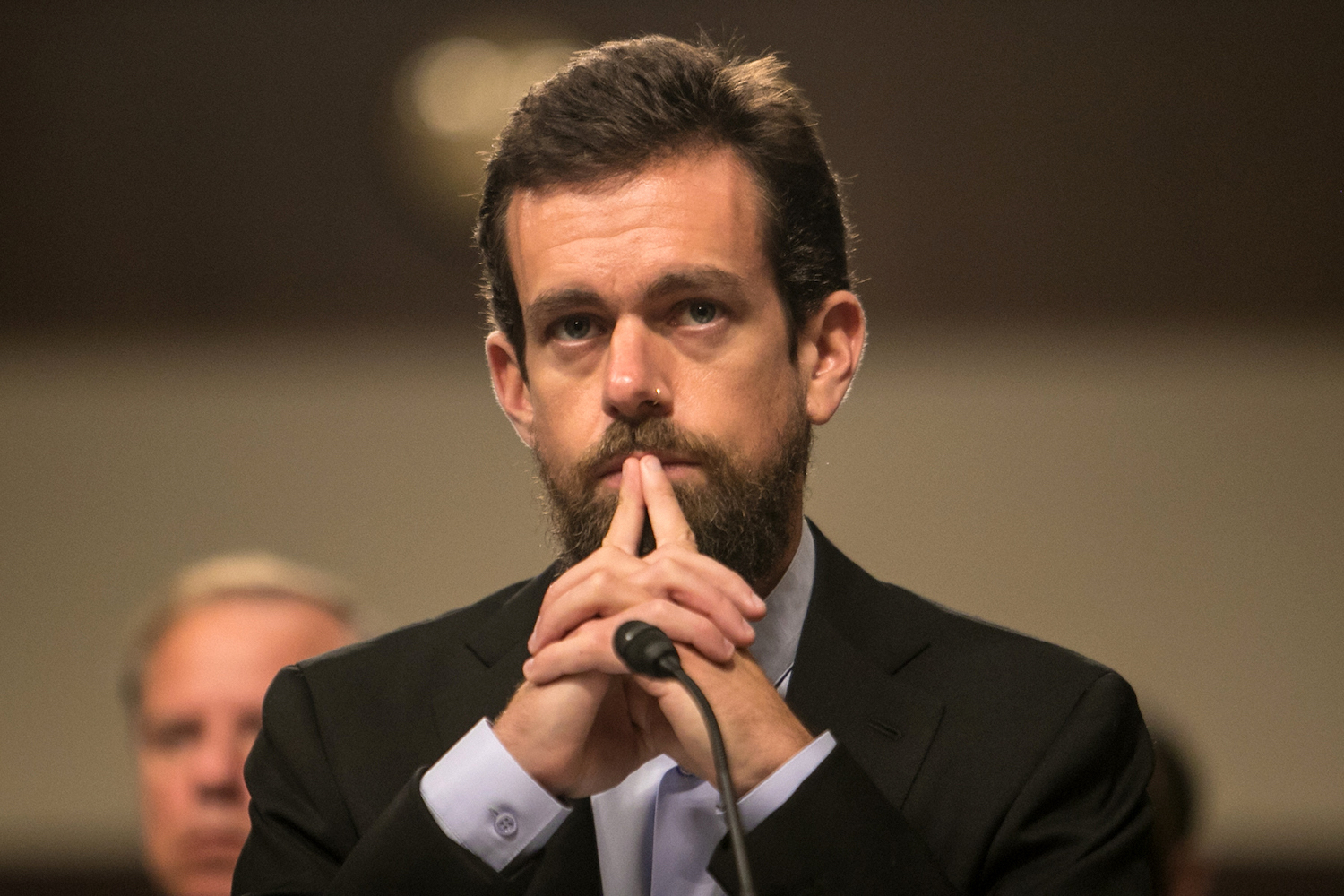

Gadde, who along with Twitter CEO Jack Dorsey met with President Trump last month to discuss the “health of the public conversation” on Twitter, said the company is working with external researchers on the project. She declined to name them and added that the researchers are under non-disclosure agreements (NDAs).

“We’re working with them specifically on white nationalism and white supremacy and radicalization online and understanding the drivers of those things; what role can a platform like Twitter play in either making that worse or making that better?” she said.

“Is it the right approach to deplatform these individuals? Is the right approach to try and engage with these individuals? How should we be thinking about this? What actually works?” she added.

Motherboard spoke to several academics who have studied Twitter and radicalization online. While most of them said that they are glad Twitter is finally thinking about the issue of white supremacists on the platform, several said they believe the company should be constantly researching these issues, and should have started long ago.

When Motherboard described Twitter’s plans on the phone, two of the academics laughed before responding.

“That’s wild,” Becca Lewis, who researches networks of far right influencers on social media for the nonprofit Data & Society, said. “It has a ring of being too little too late in terms of launching into research projects right now. People have been raising the alarm about this for literally years now.”

“I mean, these quotes are a disaster, I’m going to be honest,” Angelo Carusone, president of Media Matters, a progressive group that studies conservative disinformation, said. “The idea that they are looking at this matter seriously now as opposed to the past indicates the callousness with which they’ve approached this issue on their platform.”

Other experts Motherboard spoke to acknowledged this is a complicated problem.

“I’m glad to hear that they’re doing their due diligence and consulting experts on this complex matter,” Jillian York, director for international freedom of expression at the Electronic Frontier Foundation, said in an online chat. “Powerful (extremist or otherwise) actors will always find another way to get their word out, and we know from experience that speech restrictions (including of extremist content) all too often catch the wrong actors, who are often marginalized individuals or groups. And so I’m glad to see Twitter thinking creatively about this problem.”

Twitter has faced repeated criticism for not taking a more aggressive approach towards banning white nationalists. In April, British MP Yvette Cooper asked Twitter during a parliamentary committee hearing why the network had not banned former KKK leader David Duke.

After a Motherboard investigation, Facebook changed its policy and now bans white nationalism, white separatism and white supremacy. The reason for the switch, Facebook said at the time, was that white nationalism is linked to organized hate.

After that policy change, neither Twitter nor YouTube would commit to making the switch, but Twitter said that it bans “hateful conduct” and “abusive behavior.” Twitter did not say when the study might be completed, whether it would be released to the public, or whether the company plans to make policy changes when it’s done.

Twitter has long taken a much more expansive view of what should be allowed on its platform than other social media companies. The company has long believed that good speech can counteract bad speech: “We are the free speech wing of the free speech party,” a company executive famously said in 2012.

Heidi Beirich, director of the intelligence project at the Southern Poverty Law Center, said, “Twitter has David Duke on there; Twitter has Richard Spencer. They have some of the biggest ideologues of white supremacy and people whose ideas have inspired terrorist attacks on their site, and it’s outrageous.” She added that “there’s no question we already have that evidence” to suggest that white supremacy is a problem on Twitter.

The idea that “counter-speech” can counteract white supremacy specifically on Twitter is also one that academics are skeptical of. While it’s appealing to believe that white supremacists can be convinced to not be racist, both Lewis and Carusone said that Twitter’s platform makes that very unlikely.

“Counter-speech is really appealing and there are moments when it does absolutely work, but platforms have an ulterior motive because it’s a less expensive and more profitable option,” Lewis said. “When you’re talking about counter-speech, what that is assuming is an environment where people are generally interested in engaging in good-faith conversations and having their minds changed. That is often extremely far from the case on Twitter, where networked harassment campaigns are common, with white nationalists often taking part in those campaigns.”

Carusone added that he believes that “fundamental cheating” on Twitter makes it an uneven playing field: “If Twitter hasn’t solved brigading, bots, and sock puppets, the counter speech never has a chance to air itself out.”

Deplatforming, in which Twitter would ban white supremacy and nationalism altogether, is a method advocated by some experts. But detecting and removing white supremacy isn’t always straightforward and, as Motherboard reported earlier this year, is more difficult to do algorithmically than banning terrorist content. And then there’s the question of where white supremacists go after they’re banned: “It’s important that the research is being done, but these companies need to calculate their response to online hate and extremism by also considering their place in the broader ecosystem,” Oren Segal, director of the Anti-Defamation League’s Center on Extremism, said.

Twitter deploys a more multifaceted approach to content moderation than simply allowing accounts to stay online or banning them. The network may downgrade certain replies, making them not immediately visible to a user. But generally speaking, the material, and the users, are still there.

“Providing transparency into what is going on in the world is really important, and that hiding things doesn’t make them automatically disappear,” Gadde said.

This article originally appeared on VICE US.