‘They Would Go Absolutely Nuts’: How a Mark Cuban-Backed Facial Recognition Firm Tried to Work With Cops

Credit to Author: Joseph Cox | Date: Mon, 06 May 2019 18:49:26 +0000

Facial recognition technology is becoming more common across the United States, for both law enforcement and private companies. Now, emails obtained through a public records request provide insight into how facial recognition companies attempt to strike deals with local law enforcement as well as gain access to sensitive data on local residents.

The emails show how a firm backed by Shark Tank judge, Dallas Mavericks owner, and billionaire entrepreneur Mark Cuban pushed a local police department to help it gain access to state driver’s license photos to train its product. The emails also show the company asked the police department to vouch for it on a government grant application in exchange for receiving the technology for free.

“Chief, you seemed pretty keen on the use of facial recognition in stadiums. If you know of any place to start, please let me know,” a 2016 email from Jacob Sniff, a co-founder of facial recognition startup Suspect Technologies, addressed to Michael Botieri, chief of the Plymouth Police Department in Massachusetts, reads. Cuban, who invested in the company that same year, also co-led and closed an $810,000 round of investment into the firm last December. Cuban has used the company’s technology in the Mavericks’ locker room.

In the emails, Sniff repeatedly asked Botieri to deploy the technology in his district to help improve the product. Sniff mentioned plans for the technology to filter search results by a person’s gender or ethnicity, and to deploy “emotion recognition.”

“I’m not involved in their day to day operations but my guess is that they were looking to acquire data sets to train models,” Cuban told Motherboard in an email, referring to the attempt to gain drivers’ photos from the state Registry of Motor Vehicles.

Kade Crockford, director of the Technology for Liberty Program at the ACLU of Massachusetts, who provided the emails to Motherboard, said, “They reveal that self interested technology vendors are working behind the scenes to push unreliable, invasive surveillance tools on unsuspecting communities, entirely in the dark.”

Botieri did not respond to multiple requests for comment.

Do you know anything else about facial recognition technology, or who is buying it? You can contact Joseph Cox securely on Signal on +44 20 8133 5190, Wickr on josephcox, OTR chat on jfcox@jabber.ccc.de, or email joseph.cox@vice.com.

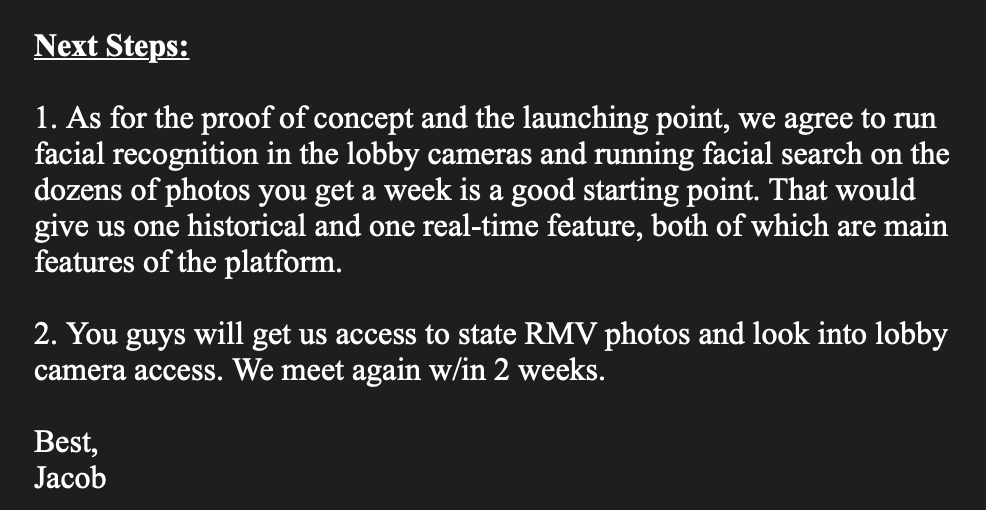

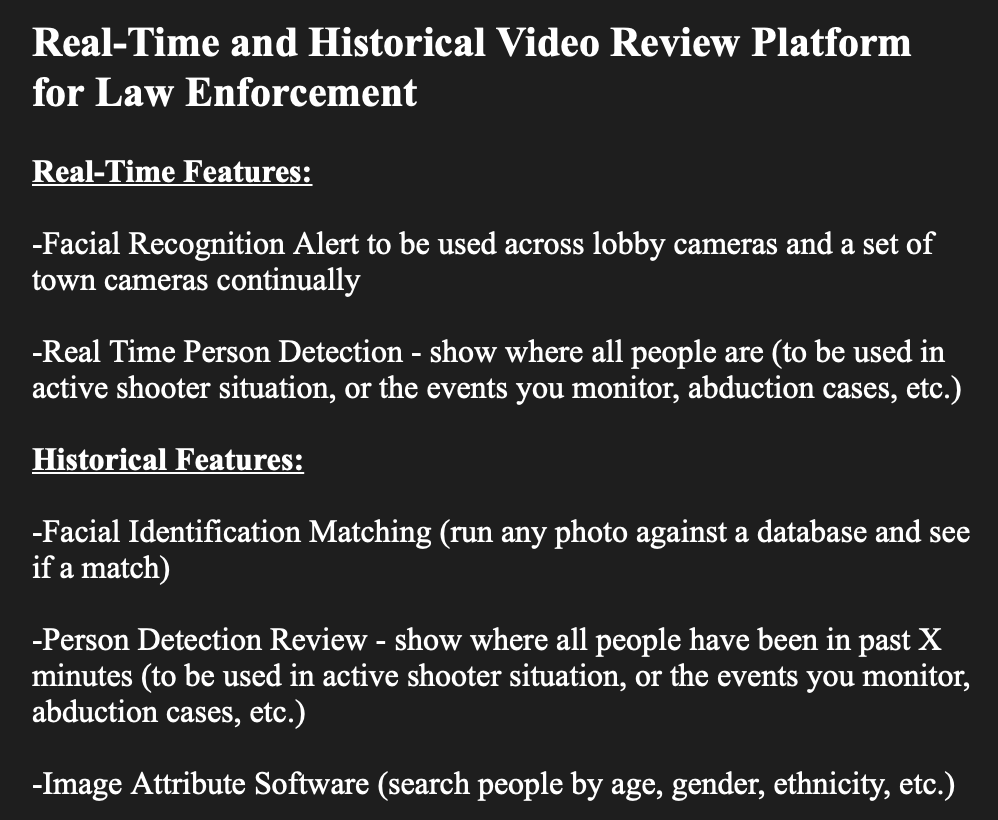

As for Suspect Technologies’ pitch, one January 2018 email describes a facial recognition system with various features that the company could implement at a future date. It would be set up on cameras in police lobbies and across town with “Real Time Person Detection,” which would “show where all people are (to be used in active shooter situation, or the events you monitor, abduction cases, etc.)” Another set of features would be focused on historical data, which could “show where all people have been in past X minutes,” and “Image Attribute Software (search people by age, gender, ethnicity, etc.)”

Sniff’s emails show that he knew facial recognition technology is a contentious subject.

“So you would aim to do this on all or most of the buildings you showed me in person? We would be fine on the privacy concerns for this?” Sniff wrote in a November 2017 email to the police department. “I do realize the technology could be perceived as controversial, though the stark reality is that it could save lives.”

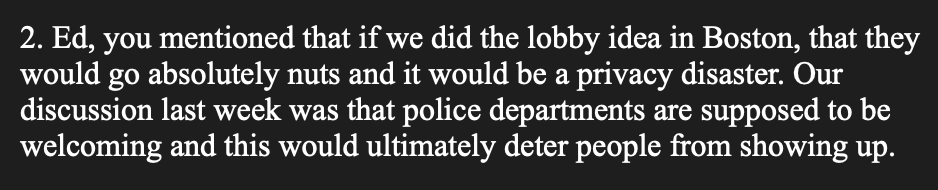

“Ed, you mentioned that if we did the lobby idea in Boston, that they would go absolutely nuts and it would be a privacy disaster. Our discussion last week was that police departments are supposed to be welcoming and this would ultimately deter people from showing up,” Sniff wrote in an April 2018 email chain that included Ed Davis, a former Boston Police Commissioner who now runs a security consulting firm.

Davis added, “I also know that if I tried to implement this system in Boston I would be run out of town by the liberal activists and privacy zealots, to say nothing of the Boston Globe and their advocacy for undocumented immigrants.”

Davis told Motherboard in an email, “Technology is moving faster than societies [sic] capacity to debate important privacy implications. I hope my unrefined comments drove home the importance of these concerns to the brilliant technology entrepreneur who asked for my input. We are all trying to navigate this important space.”

Sniff asked Chief Botieri to sign a letter helping Suspect Technologies receive a grant from the National Institute of Standards and Technology (NIST), according to a January 2017 email. Sniff offered to give the police department the facial recognition technology for free in exchange for signing the letter.

“Of course, we’d offer the technology to you guys eventually for free when it’s ready :),” Sniff wrote. The next month Botieri sent the signed version, another email shows.

In a December 2017 email, one of several in which Sniff sent Botieri news articles related to facial recognition, Sniff highlighted how police in China are using similar technology in police stations to speed up administrative functions. In another, later email, Sniff sent Botieri a link to a news report about the Parkland, Florida mass shooting, and asked, “Don’t you see how facial alerts could be pretty valuable at main entrances?”

In the emails, Sniff asked about gaining access to the RMV database, as well as others, and indicated that Botieri said it might be possible.

“Could we reasonably gain access to MA RMB [RMV] databases or other similar databases in MA?” Sniff asked. “I know we discussed this at meeting [sic] and the consensus was yes. I guess I’m wondering how long would this take?”

“It’s obviously going to have to be a combination of really good tech (which we should be there soon) and also a decent database that we can go off from,” he added.

“You guys will get us access to state RMV photos,” another, later email reads, under the heading “Next Steps.”

Sniff told Motherboard in a phone call that Suspect Technologies tried to get access to the RMV database because “One thing our company wants to do is make unbiased recognition, so if we can have a larger training set to train and test on, it allows us to build better, unbiased recognition technologies.”

In an email to Motherboard last week, Judith Riley from the Massachusetts Department of Transportation Communications Office wrote, “The RMV does not provide access to its image files or facial recognition technology to any commercial third parties.” The RMV does have its own facial recognition program, which alerts police when people try to obtain multiple driver’s licenses.

Sniff told Motherboard his company has one official client and a number of beta testers for the facial recognition product. He said Suspect Technologies also has around 200 law enforcement clients for its separate video redaction system, which he said is designed to protect the privacy of, say, bystanders in video. Sniff said, “we’re still trying there, to be clear,” referring to implementing technology with the Plymouth Police.

Crockford from the ACLU of Massachusetts told Motherboard, “It’s disturbing that Suspect Technologies would suggest police in Massachusetts use face surveillance software in public buildings, including schools and even float the idea of using the software to track people by their race and gender.”

Sniff told Motherboard he didn’t specifically remember mentioning ethnicity scanning as a feature, but did say investigators “may be looking for a dark skinned person, or a white skinned person, or an Asian skinned person. It’s the first […] classifier of data.”

Multiple studies from MIT Media Lab found that facial recognition systems from IBM, Microsoft, Amazon, and Chinese firm Megvii had a harder time correctly identifying the gender of darker skinned and female faces. Microsoft and IBM said in previous statements that they improved their own datasets to address this issue.

Cuban told Motherboard in an email, “there is quite a bit of research related to determining bias in models and again, the only way to determine bias is to do robust bias testing and review for it.”

The deal between Suspect Technologies and the Plymouth Police didn’t work out. In a June 2018 email, Botieri wrote that he did not have the money budgeted for the proposal.

“Say hello to Cuban for me,” Botieri wrote in a January 2018 email.

This article originally appeared on VICE US.